Using computer vision to segment photos of bookshelves

This year, Albin and I moved our personal libraries from three different countries to one place. The final move involved a flight where I checked more than 100lbs of books!

Before the move, I cataloged our libraries. I started by getting photos of our bookshelves and stacks of books. I segmented the photos into regions corresponding to individual books. Then I labeled the segments to produce a catalog of our books. I used the project as an excuse to try using computer vision: I detect lines to detect books and I try using OCR to read titles from book spines.

The image below shows a segmented stack of books.

(Heads up, I did the project a few months ago and hacked it together, so this post is high-level and doesn’t contain as much code as my other posts!)

Bookshelf segmentation

To segment bookshelves, I used skimage, a Python image processing toolbox. I augmented the computer vision with plenty of manual help.

Book edge detection

The skimage documentation for Straight line Hough transform gives a good starting point for how to detect lines in images. Line detection is broken into two steps:

- Preprocess the image, downsampling and converting to grayscale.

- Detect edges using the Canny edge detector as implemented by

skimage.feature.canny. - Try to find lines in the image using the Hough transform as implemented by

skimage.transform.hough_line.

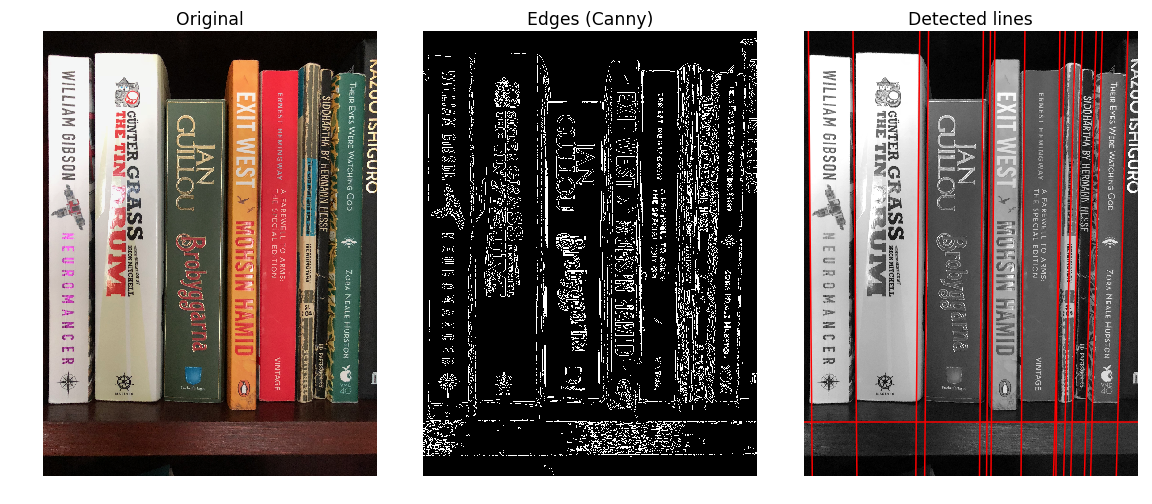

Below is an image that shows how this worked for bookshelves. I start with the original image of the bookshelf (left). I downsample, convert to grayscale, and then run Canny detector to find edges (center). Finally, the code extracts lines using skimage.transform.hough_line_peaks (right).

Out of the box, the algorithm works well. It detects the edges separating books and the edge of the shelf. However, it doesn’t detect the top or bottom edge of the books. For this reason, I just focused on separating books from one another instead of trying to find a closely cropped segment.

Lines to book segments

I define a book segment as four vertices on an image of a bookshelf that corresponds to a physical book. The polygon comes from a pair of vertical lines from the lines detected above. Below is an example of a segmented image.

Even if I only focus on vertical lines, I run into a few issues. For example, the code detects the creases in the spine of old paperback books (see image below). Other times, the code sometimes doesn’t detect the edge between books.

To solve the issues of missing and extra lines, I could probably use heuristics and refine the line detection. Instead, I just wrote code to pick a pair of lines and manually tell it whether the lines show a book segment.

The process that worked pretty well was to start with the left-most line and pick the first line to the right that is mostly parallel to the line. In the case of a misplaced line (like above in Hemingway), I could manually choose to skip that line. For the cases where an edge is missing, I have an option to draw a line that bisects the quadrilateral.

Labeling Segments with OCR

After segmenting photos into book polygons, I wanted to roughly label each book its title and author.

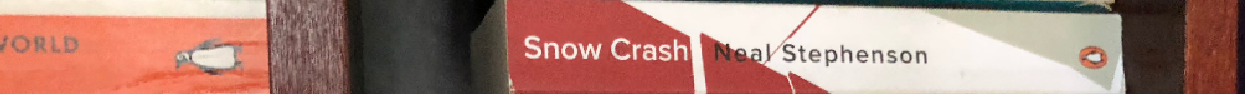

I tried using the library Tesseract to automatically detect text. I started by rotated and cropped the image to the segments above (see below).

To attempt to solve the problem of text at different angles, I tried running Tesseract on the images four times, each rotated 90°. Sometimes, one of the OCR outputted something that looked like authors or titles. For example, it detected the following text from the image.

| Angle | OCR output |

|---|---|

| 0° | Neal Stephenson |

| 90° | :omcwzamum .mmz |

| 180° | uosueqdazg wan |

| 270° | 205. wamcrmamo: |

The above example shows how Tesseract would detect garbage text when the image was rotated the wrong way. It also shows that even though Tesseract detected the author, “Neal Stephenson”, it missed the book title.

I could probably improve Tesseract’s performance using something like these tips. Other techniques might be useful: book spines have weird colors, paperbacks have creases, the text is at different angles, and the image isn’t cropped around the text.

For this project, hand-labeling the rest of the book segments was quick enough for me not to bother improving OCR.

Etc

I used the problem of creating a catalog from images of bookshelves to see how computer vision could work in a real project. Now that I have an idea of computer vision challenges, I think it would help to learn more about computer vision in general (When should I use different preprocessing and edge detectors? What are the algorithms doing and how can I adjust the parameters?)

See Also

- Try the Transcribe task from this cool Library of Congress annotation tool to see more examples of what mistakes OCR make!