Bayesian Linear Regression part 1: plotting samples from the weight prior

I took Iain Murray’s Machine Learning and Pattern Recognition course this fall. It was fun and challenging. I often studied by coding demos, and some of them are kinda cool and I wanted to share them. I’m going to start with something that shows up a few times: priors on linear regression parameters. This is based on this lecture.

Quick review: least squares linear regression that finds a single optimal fit to observations by reducing the least squares error.

Instead of finding a single fit, Bayesian linear regression model gives a distribution over all possible fits. It’s a little weird to think about. One component of Bayesian Linear Regression is a prior on the linear regression parameters. If I have a linear model

instead of setting a single value for \(w\) and \(b\), I could say \(w\) and \(b\) are normally distributed with means \(\mu_w\) and \(\mu_b\) and variances \(\sigma_w^2\) and \(\sigma_b^2\).

To find those parameter distributions in Bayesian land, I’d start with a prior distribution that says what kinds of functions I’d expect before seeing any data. Then I’d combine that with my observations in the form of the likelihood. The result is the posterior distribution, which is what I’d predict based on.

In this post, I’ll just show how to sample a couple \(w\) and \(b\) from a 2D Gaussian distribution and plot them.

Sampling from the prior

Here’s some code that samples from a prior distribution on parameters.

# Set up the values to be plotted

grid_size = 0.01

x_grid = np.arange(-5, 5, grid_size)

D = 2 # There are two parameters

# Augment x_grid with 1s to handle the bias term

X = np.vstack((

np.ones(x_grid.shape[0]),

x_grid

)).T

# How many functions to sample from the prior

line_count = 200

# Set the mu and sigma for the Gaussian on the parameters

mu_w = 0

mu_b = 0

sigma_w = 1.0

sigma_b = 1.0

w_0 = np.hstack([mu_b, mu_w])

V_0 = np.diag([sigma_b, sigma_w])**2

# Phew. Here's the part where I sample from the prior!

w = np.random.randn(line_count, D) @ V_0 + w_0

y = X @ w.TExamples

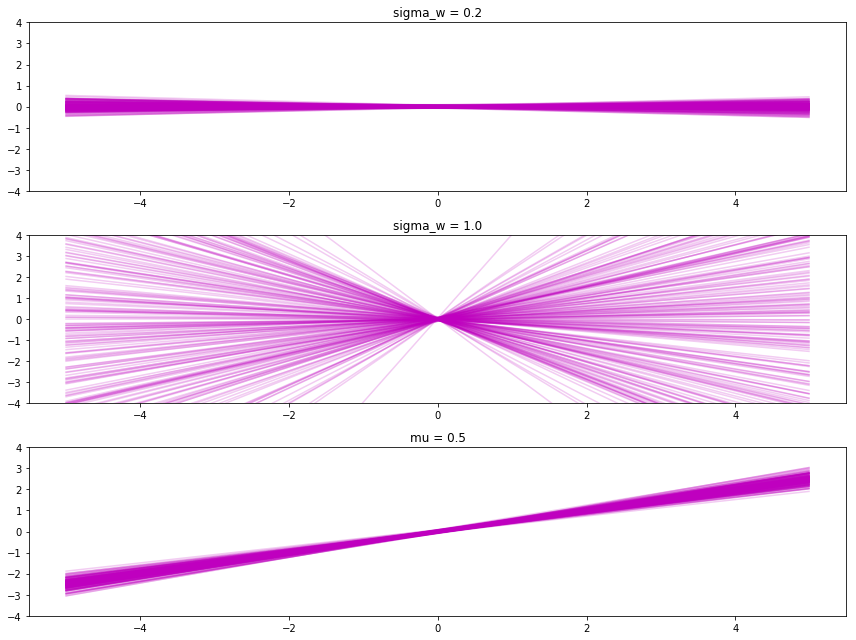

Adjusting sigma_b changes the most likely y-intercept. A small sigma_b makes more points go through the origin (top), and a large sigma_b makes points spread out more. And by setting the \(\mu\), it makes the slopes and intercepts usually near that value.

# set up helper code

def sample_prior_weights(x_grid, mu_w=0, mu_b=0, sigma_w=0.2, sigma_b=0.2):

X = np.vstack((

np.ones(x_grid.shape[0]),

x_grid

)).T

w_0 = np.hstack([mu_b, mu_w])

V_0 = np.diag([sigma_b, sigma_w])**2

w = np.random.randn(line_count, D) @ V_0 + w_0

return X @ w.T

What’s next?

Observations, posteriors on the weights, and then plotting predictions based on it!

Next

See Also

- The MLPR is lots of fun.

- Katarina’s post helped me get mathjax in Jekyll!